The challenges:

- Determining in which stages of development or deployment of technology to engage with machine learning, using a just war logic

- Choosing to closely engage with a military project as a critical scholar, given that the use of the project may result in the loss of human life

The background and policy setting:

John R. Emery is a scholar who works at the intersection of technology, international security, and the ethics of war. His recent work focuses on how emotion, abstraction, and human-machine interaction affect ethical decision-making in war. His work in Critical Military Studies analyzed the use of collateral damage estimation algorithms (also known as “bugsplat”) by the United States Air Force (USAF) and how their practical application diverged from how the software was originally written. (The term bugsplat originally referred to software that depicted the blast patterns of air-to-surface weapons as a two-dimensional shape that looked like a bug splattered on a car windshield. In popular discourse it later evolved into a dehumanizing, casual way of referring to civilian deaths in strikes. It also led to the art installation “Not a Bugsplat” in Pakistan.) This case study has important implications for both accountability for killing and the future of a more AI-enabled battlefield, because it shows how automation bias can occur in military settings. One of the fundamental flaws of the bugsplat algorithm was that it never considered the empirical number of civilian casualties when generating a probability of collateral damage; it relied on wholly theoretical data. Moreover, the program was designed to skew toward a larger munition size to ensure target destruction, rather than a smaller munition size to protect civilian life, even though protecting civilian life had become the public discourse surrounding the software. Other research published in Peace Review and Ethics & International Affairs has examined technological determinism and how it seeks to recast ethical-political dilemmas as technical problems-to-be-solved.

The policy setting was consulting with computer scientists and engineers who were tasked with writing machine learning (ML) algorithms under contracts from the USAF and Department of Defense (DOD). His role was peripheral, but he served as an interlocutor with the computer scientists, raising issues of the psychology of human-machine interaction to open a discussion with the designers of the software about its possible implications when implemented in the field. The goal was to identify possible choke points in how outputs that seem obvious from a computer science perspective may be interpreted by USAF personnel in the moment or under conditions of uncertainty. While he is unable to discuss any concrete details of the project itself, the broader issues of the capabilities of ML, their interpretation by humans in in-the-loop or on-the-loop systems, and the ethical ramifications were discussed with those in the process of writing the programs.

The primary concern from his standpoint was the ethics of engagement in the first instance. Although his role in the process was extremely marginal, he asked himself what ethics should guide him in engaging on issues that could one day be involved in taking lives. This reflection is something that anyone who works within the military and defense industry ought to grapple with, and many indeed do. Ethical discussions remain at the forefront of the deployment of AI and ML in war, so there is an openness to ethical concerns that may not have been there historically. This can often be a realm of self-justification, of making ethical arguments that justify killing in ways that would go beyond his ethical comfort zone. However, what he found with this particular team was an acute awareness of the potential pitfalls and an emphasis on the limitations of applying any program beyond the data it has been trained on. Having studied historic cases of ethical arguments from nuclear weapons to smart bombs, drones, and lethal autonomous weapons systems, Emery had an in-depth understanding of the humanitarian discourses that often accompany the killing of innocents in wars, especially in the U.S. context. Thus, he was faced with the ethical dilemma of engagement in the first place. How did this affect his moral compass? What are the moral dangers of engaging with something that one is inherently skeptical of? Many critical scholars would critique from the outside and not engage with policymakers or consult on military matters in the design, implementation, or use of new systems of war. He respects that position, and it is one that he grappled with again and again. Ultimately, however, he saw it as essential to engage with those who were initially writing the programs and who may be unfamiliar with how their programs could be utilized and mis/interpreted in the military context.
He felt his engagement could help those unfamiliar with the Law of Armed Conflict (LOAC) or the ethics of war, as well as those unfamiliar with how military culture may impact human-machine interaction in deployment, to understand tensions they would otherwise miss. Ultimately, utilizing a framework of Aristotelian practical judgment and weighing the particular context of this engagement, Emery decided to offer his consultation in a limited manner, more as an interdisciplinary conversation than as in-depth work on unknown algorithms.

In the end, he decided that the most ethical course of action would be to engage from the outset, at the ground level, with those writing the ML programs that would be utilized by the USAF, so that they were aware of potential pitfalls and could be cognizant of them during development. He ultimately does not know how these programs may be utilized, but the uses may include everything from identifying weather patterns to possible target identification, or perhaps even weapons targeting with a human in-the-loop. Nevertheless, the reason that he engages with the just war tradition the way that he does, rather than rejecting it outright, is that it provides a common language in which policymakers, military personnel, theologians, and academics can discuss and debate the ethics of war and reflect upon what it means to fight wars discriminately, proportionally, and with ethical due care, and when wars may be just or unjust.

The engagement:

Emery’s role was strictly as an outside consultant on a larger project that involved some type of ML software for the USAF. He took his role seriously as someone who could raise issues and examples of what Madeleine Clare Elish termed moral crumple zones, or the potential issues that arise in the space between the ML and the human in-the-loop.[1] Drawing upon examples of collateral damage estimation algorithms, civilian aviation, and autonomous vehicles, he was able to convey some of the potential crumple zones in the space between the intended use and the meaning that is created by the user.

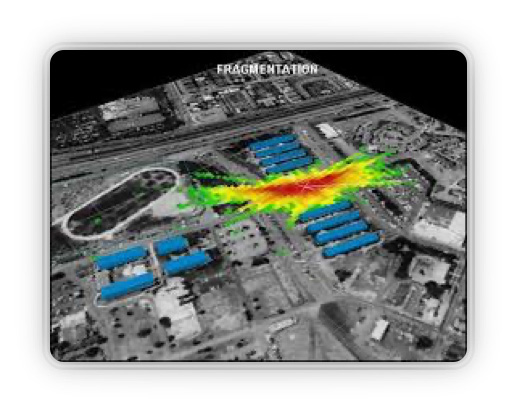

One example of these moral crumple zones in human-software interaction is the “bugsplat” collateral damage estimation tool (CDET). One flaw that he sought to address in contemporary discussions was the systemic underestimation of blast patterns by the bugsplat algorithm. Questionable assumptions from training data of the 1990s Iraqi no-fly zones were coded into subsequent updates of CDET algorithms. As Sarah Sewall notes in her book Chasing Success, the “weaponeering process was designed to underestimate the effects of a weapon (in order to ensure destruction), whereas civilian protection estimates should instead overestimate potential harm so as to understand the outer limits of effects” (emphasis original). Thus, the algorithm was biased toward bigger bombs to ensure target destruction, not smaller bombs to protect civilian life. In other words, the program was designed to suggest larger bombs to destroy targets, and collateral damage calculations based on population data were added only later; the software was designed to kill and not to save, but the discourse surrounding it became that of protecting civilian life. The moral crumple zone lay in the disconnect between how bugsplat was designed, how it was discussed in the USAF, and what the software was actually doing.[2] Thus, the design itself has this tendency to underestimate casualties for no reason other than the training data from the 1990s; that is, they adapted their existing programs rather than creating an entirely new algorithmic infrastructure that would skew bomb sizes smaller to actually protect civilian life. Moreover, even if the algorithmic process is focused on blast fragmentation, no matter how sophisticated, it relies on accurate population density numbers to make a proportionality calculation. Once a war commences, any semblance of an ability to accurately measure populations goes out the window.
Indeed, the USAF intentionally excluded actual empirical data on civilian casualties that could have been used to retrain the algorithms. Ultimately, these moral crumple zones often occur in contexts where there can be an automation bias, even in expert users, that assumes the technology possesses capabilities it does not. That is especially important to remember in the military context, where these decisions have life-and-death consequences, both for our soldiers and their civilians.

So, as a scholar of the just war tradition who analyzes the interaction between humans and technology in the context of war, Emery faced a dilemma in the ethics of engagement. Is it better to engage in the early stages of algorithmic development (even in a small capacity) than to critique deployed systems? After much weighing and consideration, he chose the former. He believes that it is essential for computer scientists and engineers to understand that programs built on the narrow context of ML training data can oftentimes be applied to new and unfamiliar contexts. Hence, they must be careful to caveat their findings and state the limitations of their programs. War is not going to disappear, and the USAF is currently working with some badly outdated systems that do not match our capabilities to improve the protection of innocents in war. It is necessary to update outdated software built on even worse data and limited understandings of the complexities and environments of the contemporary world. Raising ethical, practical, and pragmatic questions in the design phase allows the computer scientists and engineers to consider them while programming and to use their practical judgment for unforeseen or unknown circumstances.

What were the implications of the engagement?

Ultimately, there was an extremely positive reception to the raising of these questions, and it will be an ongoing relationship and sounding board to talk through the psychology of human-machine interaction in the military context. The ambiguity surrounding the precise nature of the project, together with his own ethical boundaries, allows him to engage comfortably on these topics, ideally to improve information environments for US soldiers and perhaps even to increase civilian protections in war, which is a goal of the USAF. There was a strong sense of responsibility and engagement with the ethical issues among the programmers, and it was not simply a box to be ticked. Emery was impressed by the level of care taken by the group to ensure that the ML was useful, did what they wanted it to do, and was properly understood and applied. There are never precise or fixed rules when it comes to warfare. The laws and ethics of war are highly contextually bound, and thus fraught with interpretation and ethical practical judgment. AI and ML are often touted as solutions to the ethical-political dilemmas of killing in war, and yet these programs form one set of tools amongst many that decision-makers and soldiers have in interpreting the battlefield. No one solution is a panacea, and war should almost always be avoided at all costs. Those of us who study the ethics of war are both allured and repelled by the extremes of humanity and inhumanity in fighting. Some make the personal choice to critique from the outside while not engaging in the policy world, and Emery has the utmost respect for those scholars and their moral decision-making. He has grappled with the dilemmas for some years and has concluded that critical scholars especially should engage where they can, to raise essential practical and ethical concerns as early in the process as they can.

Cited in Neta C. Crawford. (2013) Accountability for Killing: Moral Responsibility for Collateral Damage in America’s Post-9/11 Wars. (New York: Oxford University Press).

[1] Madeleine Clare Elish. (2019). Moral Crumple Zones: Cautionary Tales in Human-Robot Interaction. Engaging Science, Technology, and Society. DOI: 10.17351/ests2019.260

[2] Ibid.